The main goal of our work was the investigation of possible significant correlations between different type of environmental pollution, socio-economic factors, clinical comorbidities and AD mortality in the Italian provinces from 2015 to 2019 through a procedure based on machine learning techniques. Our pipeline is summarized in Fig. 1.

To select the most performing algorithms between Linear Model (LM), and Random Forest (RF) we applied a common five-fold cross validation procedure. Then we used the feature set selected by wrapper method Boruta to feed this learning model for the prediction of SMR. Also to verify the robustness of our results we employed a complex networks tool used in previous works to analyze co-expression networks. Finally, we applied a feature importance procedure to outline the role of each feature at wider global and finer local scales with Random Forest internal functionalities and Shapley (SHAP) values algorithm respectively.

Flowchart of the implemented analysis. After a data collection and a pre-processing phase, we selected the best model (among LM and RF) to forecast the SMR for the 107 Italian provinces between 2015 and 2019. Then, we developed a feature importance procedure to improve the performance of the selected algorithm and to measure the importance of each variable in the model.

Data collection and preprocessing

In this work we considered 31 indicators that we grouped into 5 categories: Air Pollution, Soil Pollution, Urban Environment, Socio-economic Data and Other Pathologies. The full list of such input variables, along with descriptions and related data sources, is reported in the Supplementary Information. We collected data for the years 2015-2019 of each Italian province from public repositories of Italian National Institute of Statistics (ISTAT) and Regional Environmental Protection Agencies (ARPAs). Only the feature “Life Quality Index” is provided by “Il Sole 24 Ore”. This index is calculated considering 90 different indicators. As for the clinical comorbidities we chose the mortality rate of some diseases connected to AD according to several studies, namely diabetes, ischemia, pathologies related to the circulatory, digestive and brain systems. Among Air Pollution data we also considered Air Quality Index (AQI) provided by http://moniqa.dii.unipi.it/. This index is obtained by dividing the measurement of the pollutant, by its reference limit, established by the Italian Legislative Decree 155/201036.

All features used in this work are available at https://github.com/OneHealthBari/Italian-provinces-data.git

Standardized AD mortality

ISTAT does not provide the Standardized Mortality Ratio (SMR) of AD for the 107 Italian provinces. Therefore, we computed it through the following definition37,38:

$$begin{aligned} SMR = frac{O_m}{E_m}, end{aligned}$$

(1)

where (O_m) and (E_m) are the observed and the expected number of death by cause respectively. (E_m) is defined as the weighted sum of age-specific death rates of the reference population (R_i^M) per the population of a given locality and given age (n_i):

$$begin{aligned} E_m=sum _{i=1}^{I} R_i^M times n_i ,end{aligned}$$

(2)

(R_i^M) is obtained dividing the number of deaths by age and cause of the reference population (M_i) with the age-specific reference population size (N_i):

$$begin{aligned} R_i^M=frac{M_i}{N_i} ,end{aligned}$$

(3)

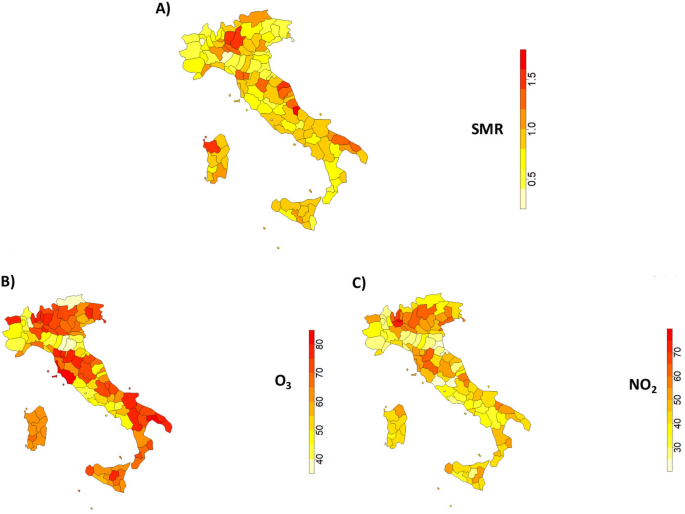

For a given province a SMR value higher than 1, means that the mortality incidence exceeds the reference one (we considered the Italian value as reference). Figure 2 shows the distribution of the value of the SMR (Panel A) and the concentrations of (O_3) (Panel B) and (NO_2) (Panel B) by province, averaged for the considered time window (2015–2019). Our computation of SMR is available on39 . More information about SMR computation is reported on40.

Panel (A) shows the average standardized mortality rate distribution for Alzheimer’s disease within Italian provinces; Panel (B) and Panel (C) show the average average distribution of (O_3) and (NO_2) concentrations ((mu g/m^3)) at Italian provincial level. This image has been created with the software package “sf” of R 4.2.241.

The study of AD is a very complex issue to deal with in terms of time, in fact we do not know exactly when the disease started, the onset could even go back decades before the patient’s death. Therefore, due to the peculiarity of the analyzed pathology and the impossibility of predicting the exact moment in which it began to develop, in our analysis we focused exclusively on the spatial characterization of the data. The idea is therefore to eliminate the temporal component from the analysis, and to study the spatial correlations between SMR and pollution, socio-economic and health data. We assumed that there have been no substantial changes in the spatial distributions of input data over the considered years.

Feature selection procedure

We applied a feature selection procedure relied on the wrapper method Boruta42 with the aim to improve the performance of our selected learning model. This represents a common and extensively applied strategy in machine learning analysis. It involves an initial step of selecting features that optimize model performance using a wrapper algorithm. Subsequently, only the most informative features are fed into the algorithm as input to reduce noise. This approach helps to mitigate the potential pitfalls associated with standard machine learning applications, such as overfitting and underfitting. Boruta is a robust method, based on Random Forest, that reduces noise and correlated features through the randomization of the training set. For each original feature, the algorithm produces a synthetic one, called shadow, built by randomly mixing the values of each original indicator. With the new dataset (original features and shadows) Boruta trains a RF algorithm in order to compute, after a number of independent shuffles, the importance of both original and artificial variables. Finally only the features that are statistically more important than their respective shadow counterparts are selected. In particular, a permutation procedure is used to validate the role that the Random Forest (RF) algorithm assigns to the features and thus increase the robustness of the method. Shadow attributes are used as reference values to determine the importance of the features. When synthetic features have an importance that closely matches the corresponding best shadow features, it is challenging for Boruta to make decisions with the desired confidence. Boruta is intentionally designed to select all features that are relevant to the prediction of the outcome variable and that minimise prediction error. The specific steps that Boruta performs are as follows43:

-

Permute each feature (X_j) to create a shadow feature (X_j^{(s)}).

-

Build a Random Forest model using both the original and shadow features.

-

Calculate the importance of each feature (X_j) and (X_j^{(s)}) by Mean Decrease Accuracy. Z-score is then calculated from the ratio between the mean accuracy loss and the standard deviation of the same distribution.

-

Identify of the maximum Z-score among the shadow attributes (MZSA).

-

Declare (X_j) as important for a single run if its Z score exceeds the Z score of MZSA.

-

Perform a two-sided statistical test for all attributes assuming the null hypothesis that the importance of the variables is equal to the maximum importance of MZSA. For each characteristic (X_j), the algorithm records how often in M runs the importance of (X_j) exceeds the MZSA (a hit is registered for the variable). The expected number of hits, following a binomial distribution with (p =) (q =) 0.5, is (E(M) = 0.5M) with a standard deviation (S = sqrt{0.25M}). Subsequently, (X_j) is categorized as important when the number of hits significantly exceeds E(M) and as unimportant when the number of hits significantly falls below E(M).

-

Repeat the preceding steps for a predetermined number of iterations, or continue the process until all attributes are appropriately tagged.

The entire feature selection procedure was carried out within a 5-fold cross-validation framework as described below.

Learning framework

We implemented a learning framework to forecast the SMR at provincial level. We fed our models with the features selected by Boruta. We started with a linear hypothesis and then we used a machine learning approach based on Random Forest (RF)44 to improve the model performances. Multiple linear regression is one of the most basic and used statistical models. This model investigates, under a linear hypothesis, the relationship between a dependent variable (y), some independent variables ((x_i)) and their interactions:

$$begin{aligned} y = beta _0 + beta _1x_1 + beta _2x_2 +… beta _nx_x + eta, end{aligned}$$

(4)

where (beta _0) is the intercept value, (beta _i) are the regression coefficients to estimate, (eta) is the model error and n i the number of features selected by Boruta.

RF is an algorithm composed by an ensemble of binary classification trees (CART). Ensemble learning refers to the methodology of utilizing multiple models that are trained on the same dataset. The final output is determined by averaging the results produced by each individual model. This approach aims to achieve a more potent and robust predictive or classification result by leveraging the diverse perspectives and strengths of multiple models44. RF is a supervised machine learning model widely used because is suitable for modeling multimodal data and easy of tuning with on only two parameters to set: the number of randomly selected features at each node F , and the number of trees of the forest D. Furthermore RF is very robust against overfitting issue thanks to a training phase based on a bootstrap process and a feature randomization procedure during which the forest is developed. Thanks to the use of decision trees, the Random Forest (RF) algorithm is able to capture non-linear relationships present in the input features unlike the linear model. Another important functionality of RF is the ability to assess the importance of each variable used in the model through an internal feature importance procedure. In our work we trained RF model with a dataset composed by 107 Italian provinces and 31 socio-economic, health and pollution indicators. Furthermore, we used the mean decrease impurity as feature importance method, and a RF configurations with M = 600 trees and F = S/3, where S is the number of input features.

We applied a 5-fold cross validation (CV) framework, repeated 100 times, to further increase the robustness of our procedure. In the same way, RF overall feature importance is computed by averaging over 100 CVs.

We evaluated the performance of models using both the linear correlation coefficient between predicted and actual values and the root mean absolute error (MAE), defined as:

$$begin{aligned} MAE =frac{1}{n} sum _{i=1}^{N} left| A_i – P_iright|, end{aligned}$$

(5)

where (A_i) is the actual value and (P_i) is the predicted value.

Complex networks tool

To verify the robustness of our findings we implemented a complex network approach. A complex network is a geometric model consisting of points (nodes) and lines (links) that symbolise the relationships between the elements within a complex system. The complex network approach is widely used in the study of complex systems because it provides information about the behaviour of the system through the abstraction of the network structure.

Our aim was to evaluate whether adding further confounding features to the ML model described in Sect. “Learning framework”, our results remain constant. To do this we have created the network of Italian provinces. Starting from the dataset described in Sect. “Data collection and preprocessing” (107 provinces and 31 independent indicators) we build a network in which the nodes are represented by the Italian provinces. From this network, firstly we extracted some network features (4 new variables), able to capture the connections between different provinces and to provide additional spatial information to the model. Then we added the new features to the 31 indicators previously used and repeated the Machine Learning procedure described in the previous section.

In order to create the adjacency matrix we computed Spearaman’s correlation between each province, which is defined by an array of the 31 features described in Sect. “Data collection and preprocessing”. We used the Spearman’s correlation for two main reasons: (i) outliers were present in analyzed dataset45; the sample size was quite small46. Given provinces i and j, we computed (d_{i,j}), an element of the Spearman correlation matrix D, as the absolute value of the correlation coefficient (r_{i,j}) between the indicator values of province i ((x_i)) and province j ((x_j)), for the (N = 31) indicators:

$$begin{aligned} d_{i,j}=|r_{i,j}|=left| frac{sum _{a=1}^{N} (R(x_{i,a}) – R(bar{x}_i))sum _{b=1}^{N} (R(x_{j,b}) – R(bar{x}_j))}{sqrt{sum _{a=1}^{N} (R(x_{i,a}) – R(bar{x}_i))^2sum _{b=1}^{N} (R(x_{j,b}) – R(bar{x}_j))^2}}right|, end{aligned}$$

(6)

where (bar{x}_i) and (bar{x}_j) are the mean province i and province j indicator values across all the used indicators and (R(x_{i,a})) the rank variable.

Adjacency matrix and information entropy

Starting from the correlation matrix D with the elements (d_{i,j}), we computed the adjacency matrix C of elements (c_{i,j}) by means of a hard thresholding procedure:

$$begin{aligned} c_{i,j}={left{ begin{array}{ll} 1, &{} text {if} ,d_{i,j}ge th ,text {and} ,ine ,j, \ 0, &{} text {otherwise}, end{array}right. } end{aligned}$$

(7)

where th indicates the optimal threshold that we selected to maximize the Shannon entropy based on Freeman’s betweenness centrality, a topological property of the network. So, we choose the network configuration that maximizes the entropy of betweenness distribution. The betweenness of node (province) i in a network with G elements (provinces) is defined as:

$$begin{aligned} b_i=sum _{ine jne k}^{G}frac{n_{jk}(i)}{n_{jk}}. end{aligned}$$

(8)

where (n_{jk}(i)) is the number of geodesics (the shortest path connecting two nodes) between node i and node j that pass trough node i and (n_{jk}) is the number of geodesics between node j and node k.

For each value of th, we got a different adjacency matrices (C^{(th)}) and compared the Shannon entropy:

$$begin{aligned} H_{C^{(th)}}=-sum _{i=1}^G b_i^{(th)} log _2(b_i^{(th)}) end{aligned}$$

(9)

where (b_i^{(th)}) is the betweenness of node (province) i in the network defined by adjacency matrix (C^{(th)}).

The hard threshold analysis implemented in this work is an approach proposed in previous articles for the study of gene co-expression networks47,48,49.

Network centrality features

We calculated some quantities related to the intensity of the node connections and the weight distribution in order to be able to study the same nodes as their interaction changes. For each province in the network we computed the betweenness and the degree defined as the amount of connections of the node i:

$$begin{aligned} k_{i}=sum _{j=1}^{G}c_{ij}, end{aligned}$$

(10)

Along with these two measurements we also evaluated the eigenvector centrality that quantifies a node’s importance while giving consideration to the importance of its neighbors and the closeness centrality of province i in a network with N nodes:

$$begin{aligned} cl_{i}=frac{N-1}{sum _{j=1}^{N-1}s_{ij}}, end{aligned}$$

(11)

where (s_{ij}) is the shortest-path distance between i and j.

Explainable artificial intelligence and Shapley values

The main purpose of Explainable Artificial Intelligence (XAI) is to increase transparency and interpretability of Machine and Deep Learning methods50,51,52. XAI refers to a set of techniques that combines a number of properties of AI models such as informativeness, uncertainty estimation, generalization and transparency53,54. In our analysis, we implemented the SHAP local explanation method to evaluate the role of each feature in the Random Forest model. Unlike the feature importance evaluated entirely by Random Forest which provides global information of the machine learning algorithm on the whole training set, SHAP gives the contribution of each feature in the prediction of the single observation. The SHAP algorithm is based on cooperative game theory55,56 and the concept of the Shapley (SHAP) values. Given all possible feature subsets F of the total feature set S ((F subseteq S)) for a feature j the SHAP value is evaluated as the difference between two model outputs, the first obtained including that specific specific feature, the second without. The SHAP value of the j-th feature for the observation x is measured through the addition of the j-th feature to all possible subsets,

$$begin{aligned} SHAP_j(x) = sum _{Fsubseteq S – {j}} frac{|F|!(|S|-|F|-1)!}{|S|!} [f_{x}(F cup {j})-f_{x}(F)], end{aligned}$$

(12)

where |F|! is the number of feature permutations which precede the j-th feature; ((|S|-|F|-1)!) is the number of feature permutations that follow the j-th feature value; |S|! represents the total number of permutations of features; (f_{x}(F)) indicates the model prediction f for the sample x, considered a subset F without the j-th feature; (f_{x}(F cup {j})) is the output of the same model including the j-th feature55. In our analysis we computed the mean SHAP values after a 5-fold CV, repeated 100 times for each considered year.

Data processing and statistical analyses were performed in R 4.2.241 and Python 3.9.

Rohit Malhotra is a medical expert and health journalist who offers evidence-based advice on fitness, nutrition, and mental well-being. His articles aim to help readers lead healthier lives.